HTML5 has been criticised for not having a timing model of the media resource in its new media elements. This article spells out such a model and builds a framework for how we should think about HTML5 media resources. Note: these are my own thoughts and nothing official from HTML5, just conclusions I have drawn from the specs and from discussions I have had.

What is a time-linear media resource?

In HTML5, and also in the Media Fragment URI specification, we deal only with audio and video resources that represent a single timeline exclusively. Let’s call such Web resources time-linear media resources.

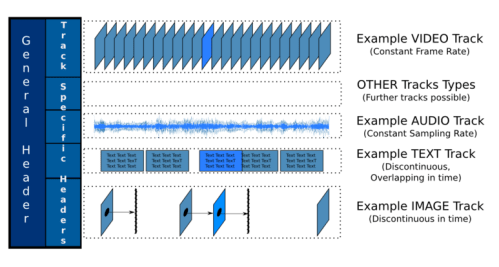

The Media Fragment requirements document actually has a very nice picture to describe such resources – replicated here for your convenience:

The resource can potentially consist of any number of audio, video, text, image or other time-aligned data tracks. All these tracks adhere to a single timeline, which tends to be defined by the main audio or video track, while other tracks have been created to synchronise with these main tracks.

This model matches the view of video taken by YouTube and other video hosting services. It also matches the video delivered by video streaming services.

Background on the choice of “time-linear”

I’ve deliberately chosen the word “time-linear” because we are talking about a single, gap-free, linear timeline here and not multiple timelines that represent the single resource.

The word “linear” is, however, somewhat overloaded: when digital systems entered the world of analog film, they brought with them what is now known as “non-linear video editing”. This term originates from the fact that non-linear video editing systems don’t have to spool linearly through film material to get to an edit point, but can access any frame in the footage directly and as easily as any other.

When talking about a time-linear media resource, we are referring to a digital resource and therefore direct access to any frame in the footage is possible. So, a time-linear media resource will still be usable within a non-linear editing process.

As a Web resource, a time-linear media resource is not addressed as a sequence of frames or samples, since these are encoding-specific. Rather, the resource is handled abstractly as an object that has track and time dimensions, and possibly spatial dimensions where image or video tracks are concerned. The frame rate of the encoding does not matter and could, in fact, be changed without changing the resource’s time, track and spatial dimensions, and thus without changing the resource’s address.
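To make the frame-rate point concrete, here is a small Python illustration (an editorial sketch, not taken from any spec): a time offset such as “10 seconds in” is the same address whatever the encoding, while the frame it lands on depends on the frame rate. The function name `frame_at` is hypothetical, and it assumes a constant frame rate.

```python
import math
from fractions import Fraction


def frame_at(seconds: Fraction, fps: Fraction) -> int:
    """Index of the frame on screen at a given time offset.

    Assumes a constant frame rate: frame n covers the interval
    [n / fps, (n + 1) / fps), so the frame shown at time t is floor(t * fps).
    """
    return math.floor(seconds * fps)


# One time address, e.g. "10 seconds into the resource"...
address = Fraction(10)

# ...resolves to different frames in different encodings of the same resource.
for fps in (Fraction(25), Fraction(30000, 1001), Fraction(50)):
    print(f"{float(fps):.3f} fps -> frame {frame_at(address, fps)}")
```

With the three rates above, this prints frames 250, 299 and 500 respectively, while the time address itself never changes.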

Interactive Multimedia

The term “time-linear” is used to distinguish a media resource that follows a single timeline from one that deals with multiple timelines, linked together based on conditions, events, user interactions, or other disruptions to create a fully interactive multi-media experience. Thus, media resources in HTML5 and Media Fragments do not qualify as interactive multimedia themselves: they are not regarded as a graph of interlinked media resources, but simply as a single time-linear resource.

In this respect, time-linear media resources are also different from the kind of interactive multi-media experiences that an Adobe Shockwave Flash, Silverlight, or SMIL file can create. These can go far beyond what current typical video publishing and communication applications on the Web require, and far beyond what the HTML5 media elements were created for. If your application needs multiple timelines, you may have to use SMIL, Silverlight, or Adobe Flash to create it.

Note that because the HTML5 media elements are part of the Web, and therefore expose states and integrate with JavaScript, Web developers get a certain amount of control over the playback order of a time-linear media resource. The simple functions pause() and play(), together with the currentTime attribute, allow JavaScript developers to control the current playback offset and whether to pause or start playback. Thus, it is possible to interrupt playback and present, e.g., an overlay text with a hyperlink, an additional media resource, or anything else a Web developer can imagine right in the middle of playing back a media resource.

In this way, time-linear media resources can contribute towards an interactive multi-media experience, created by a Web developer through a combination of multiple media resources, image resources, text resources and Web pages. The limitations of this approach are not yet clear: how far will such a constructed multi-media experience be able to take us, and at what point does it become more complicated than an Adobe Flash, Silverlight, or SMIL experience? The answer to this question will, I believe, become clearer over the next few years of HTML5 usage, and further extensions to HTML5 media may well be necessary then.

Proper handling of time-linear media resources in HTML5

At this stage, however, we have already determined several limitations of the existing HTML5 media elements that require resolution without changing the time-linear nature of the resource.

1. Expose structure

Above all, there is a need to expose the structure of a time-linear media resource, as pictured above, to the Web page. Right now, when the <video> element links to a video file, it only accesses the main audio and video tracks, decodes them and displays them. The media framework that sits underneath the user agent (UA) and does the actual decoding for the UA might know about other tracks and might even decode, e.g., a caption track and display it by default, but the UA has no means of knowing that this happens, nor of controlling it.

We need a means to expose the available tracks inside a time-linear media resource and to give the UA some control over them, e.g. to turn a caption track on or off, to choose which video track to display, or to choose which dubbed audio track to play.
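As a rough illustration of what such exposure could offer, here is a minimal Python sketch of a track list that a page could inspect and switch. The `Track`/`TrackList` names and the rule that audio and video tracks are mutually exclusive while text tracks are independent are assumptions made for illustration; this is not an HTML5 API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Track:
    id: str
    kind: str            # "video", "audio", "captions", "subtitles", ...
    language: str = ""
    enabled: bool = False


@dataclass
class TrackList:
    tracks: list[Track] = field(default_factory=list)

    def get(self, track_id: str) -> Track:
        for track in self.tracks:
            if track.id == track_id:
                return track
        raise KeyError(track_id)

    def enable(self, track_id: str) -> None:
        """Turn a track on.

        Assumption: video and audio tracks are mutually exclusive alternatives,
        so enabling one disables its siblings of the same kind. Text tracks are
        independent, so two subtitle tracks can be shown at once.
        """
        target = self.get(track_id)
        if target.kind in ("video", "audio"):
            for track in self.tracks:
                if track.kind == target.kind:
                    track.enabled = False
        target.enabled = True

    def disable(self, track_id: str) -> None:
        self.get(track_id).enabled = False

    def enabled_ids(self) -> list[str]:
        return [track.id for track in self.tracks if track.enabled]


tracks = TrackList([
    Track("main-video", "video", enabled=True),
    Track("audio-en", "audio", "en", enabled=True),
    Track("audio-de", "audio", "de"),
    Track("subs-en", "subtitles", "en"),
    Track("subs-fr", "subtitles", "fr"),
])
tracks.enable("audio-de")   # switch the dub; audio-en is turned off
tracks.enable("subs-en")
tracks.enable("subs-fr")    # a second subtitle track, e.g. for language learners
print(tracks.enabled_ids())
```

After these calls the enabled tracks are `main-video`, `audio-de`, `subs-en` and `subs-fr`.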

I’ll discuss different approaches to exposing the structure in another article. For now, it suffices to recognise the need to expose the tracks.

2. Separate the media resource concept from actual files

An HTML page is a sequence of HTML tags delivered over HTTP to a UA. An HTML page is a Web resource. It can be created dynamically and contain links to other Web resources, such as images, which complete its presentation.

We have to move to a similar “virtual” view of a media resource. Typically, a video is a single file with a video and an audio track. But, just as typically, caption and subtitle tracks for such a video file are stored in other files, possibly even on other servers. These caption or subtitle tracks are still in sync with the video file and are therefore actual tracks of that time-linear media resource. There is no reason to treat them differently from the case where the caption or subtitle track is inside the media file.
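A sketch of this “virtual” view in Python: the resource is simply a set of tracks on one timeline, and each track records which file it comes from. The `TrackRef`/`MediaResource` names and the example.com URLs are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from urllib.parse import urlsplit


@dataclass(frozen=True)
class TrackRef:
    kind: str          # "video", "audio", "captions", "subtitles", ...
    src: str           # URL of the file that carries this track
    label: str = ""


@dataclass
class MediaResource:
    """A virtual time-linear media resource: one timeline, many tracks,
    no matter which file or server each track is stored in."""
    tracks: list[TrackRef] = field(default_factory=list)

    def add_track(self, track: TrackRef) -> None:
        self.tracks.append(track)

    def kinds(self) -> list[str]:
        return [track.kind for track in self.tracks]

    def files(self) -> list[str]:
        """Distinct files contributing to the resource, in first-use order."""
        return list(dict.fromkeys(track.src for track in self.tracks))

    def hosts(self) -> set[str]:
        return {urlsplit(track.src).netloc for track in self.tracks}


talk = MediaResource()
talk.add_track(TrackRef("video", "http://media.example.com/talk.ogv"))
talk.add_track(TrackRef("audio", "http://media.example.com/talk.ogv"))
talk.add_track(TrackRef("captions", "http://text.example.org/talk.en.srt", "English"))
talk.add_track(TrackRef("subtitles", "http://text.example.org/talk.de.srt", "Deutsch"))

print(talk.kinds())   # four tracks of one resource...
print(talk.files())   # ...spread over three files on two servers
```

Whether a caption track lives inside `talk.ogv` or in a separate file only changes its `src`, not its status as a track of the resource.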

When we separate the media resource concept from actual files, we will find it easier to deal with time-linear media resources in HTML5.

3. Track activation and display styling

A time-linear media resource, when regarded completely abstractly, can contain all sorts of alternative and additional tracks.

For example, the existing <source> elements inside a video or audio element are currently mostly used to link to alternative encodings of the main media resource, e.g. in MPEG-4 or Ogg format. We can regard these as alternative tracks within the same (virtual) time-linear media resource.

Similarly, it has been suggested to use <source> elements for alternative encodings aimed at different targets, such as mobile devices and the Web. Again, these can be regarded as alternative tracks of the same time-linear media resource.

Subtitle tracks for a main media resource are another example; referencing them through an <itext> element is currently under discussion. Subtitle tracks are in principle alternatives amongst themselves, but additional to the main media resource. Also, some people actually want to display two subtitle tracks at the same time, e.g. to learn translations.

Sign language tracks are yet another example: they are video tracks that can be regarded as an alternative to the audio tracks for hard-of-hearing users. At the same time, they are additional video tracks alongside the original video track, and it is not clear how to display more than one video track. Typically, sign language tracks are displayed as picture-in-picture, but on the Web, where video is usually displayed in a small area, this may not be optimal.

As you can see, when deciding which tracks need to be displayed, one needs to analyse the relationships between the tracks. Further, user preferences need to come into play when activating tracks. Finally, the user should be able to activate tracks interactively as well.
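One possible activation rule, sketched in Python under explicit assumptions: tracks that are alternatives to each other share a `group`, main video and audio are always on, every other kind is switched on only if the user asked for it, and within a group the user's language order decides. All names here, and the one-track-per-group rule, are hypothetical simplifications.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    id: str
    kind: str            # "video", "audio", "captions", "subtitles", "sign"
    group: str           # tracks in the same group are alternatives
    language: str = ""


# Assumption: a main video and a main audio track are always shown;
# every other kind is only activated when the user asks for it.
ALWAYS_ON = {"video", "audio"}


def pick(alternatives: list[Track], languages: list[str]) -> Track:
    """Choose one alternative by the user's language order, else the first."""
    for lang in languages:
        for track in alternatives:
            if track.language == lang:
                return track
    return alternatives[0]


def activate(tracks: list[Track], languages: list[str],
             wanted_kinds: set[str]) -> list[str]:
    """Return the ids of the tracks to activate, at most one per group."""
    groups: dict[str, list[Track]] = {}
    for track in tracks:
        groups.setdefault(track.group, []).append(track)

    active = []
    for alternatives in groups.values():
        kind = alternatives[0].kind   # assumes one kind per group
        if kind in ALWAYS_ON or kind in wanted_kinds:
            active.append(pick(alternatives, languages).id)
    return active


tracks = [
    Track("video", "video", "main-video"),
    Track("audio-en", "audio", "main-audio", "en"),
    Track("audio-de", "audio", "main-audio", "de"),
    Track("captions-en", "captions", "captions", "en"),
    Track("subs-de", "subtitles", "subtitles", "de"),
    Track("subs-fr", "subtitles", "subtitles", "fr"),
    Track("sign", "sign", "sign-language"),
]

# Default viewer with English preferences: main tracks only.
print(activate(tracks, ["en"], set()))
# Hard-of-hearing German viewer who wants subtitles and a sign language track.
print(activate(tracks, ["de", "en"], {"subtitles", "sign"}))
```

The first call activates `video` and `audio-en`; the second activates `video`, `audio-de`, `subs-de` and `sign`. Showing two subtitle tracks at once, or deciding where the sign language video goes on screen, needs more than this one-per-group rule.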

Once it is clear which tracks need displaying, there is still the challenge of how to display them. It should be possible to provide default displays for typical track types, and to allow Web authors to override these default display styles, since they know which actual tracks their resource contains.

While the default display typically seems to be an issue left to the UA to solve, display overrides are typically dealt with on the Web through CSS. How we solve this is a topic for another time; right now we can just state the need for algorithms for track activation and for default and override styling.

Hypermedia

To make media resources first-class citizens on the Web, we have to go beyond simply replicating digital media files. The Web is based on hyperlinks between Web resources, and that includes hyperlinking out of resources (e.g. from any word within a Web page) as well as hyperlinking into resources (e.g. fragment URIs into Web pages).

To turn video and audio into hypervideo and hyperaudio, we need to enable hyperlinking into and out of them.

Hyperlinking into media resources is fortunately already being addressed by the W3C Media Fragments working group, which also regards media resources in the same way as HTML5. The addressing schemes under consideration are the following:

- temporal fragment URI addressing: address a time offset/region of a media resource

- spatial fragment URI addressing: address a rectangular region of a media resource (where available)

- track fragment URI addressing: address one or more tracks of a media resource

- named fragment URI addressing: address a named region of a media resource

- a combination of the above addressing schemes
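Since the addressing schemes are still under discussion in the working group, the following is only a sketch: a simplified Python parser for fragments written in the `name=value` syntax that the Media Fragments URI 1.0 specification eventually standardised (`t`, `xywh`, `track`, `id`, combined with `&`). It handles only plain NPT times (seconds, or h:mm:ss), not SMPTE or wall-clock times, and skips the validation a user agent would need; the function names are hypothetical.

```python
from __future__ import annotations

from urllib.parse import unquote, urlsplit


def parse_npt(value: str) -> float:
    """'90', '90.5', '1:30' or '0:01:30' -> seconds (simplified NPT)."""
    seconds = 0.0
    for part in value.split(":"):
        seconds = seconds * 60 + float(part)
    return seconds


def parse_time(value: str) -> tuple[float, float | None]:
    """t=[npt:]start[,end] -> (start, end); a missing start means 0."""
    if value.startswith("npt:"):
        value = value[len("npt:"):]
    start, _, end = value.partition(",")
    return (parse_npt(start) if start else 0.0,
            parse_npt(end) if end else None)


def parse_xywh(value: str) -> tuple[str, tuple[int, ...]]:
    """xywh=[pixel:|percent:]x,y,w,h -> (unit, (x, y, w, h))."""
    unit = "pixel"
    if ":" in value:
        unit, value = value.split(":", 1)
    return unit, tuple(int(v) for v in value.split(","))


def parse_fragment(uri: str) -> dict:
    """Split a media fragment into its temporal, spatial, track and id parts."""
    result: dict = {"track": []}
    for pair in urlsplit(uri).fragment.split("&"):
        name, sep, value = pair.partition("=")
        if not sep:
            continue                       # skip empty or malformed pairs
        name, value = unquote(name), unquote(value)
        if name == "t":
            result["t"] = parse_time(value)
        elif name == "xywh":
            result["xywh"] = parse_xywh(value)
        elif name == "track":
            result["track"].append(value)  # this sketch collects every track pair
        elif name == "id":
            result["id"] = value
        # any other name is ignored in this sketch
    return result


print(parse_fragment(
    "http://www.example.com/video.ogv"
    "#t=npt:10,20&xywh=percent:25,25,50,50&track=audio&track=subtitles"))
print(parse_fragment("http://www.example.com/video.ogv#id=chapter-2"))
```

The first URI combines all three of the temporal, spatial and track dimensions; the second uses only a name.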

With such addressing schemes available, there is still a need to hook up the addressing with the resource. For the temporal and the spatial dimensions, resolving the addressing into actual byte ranges is relatively obvious across any media type. However, track addressing and named addressing still need to be resolved. Track addressing will become easier once we solve the requirement stated above of exposing the track structure of a media resource. Named addressing requires the association of an id or name with temporal offsets, spatial areas, or tracks. The addressing schemes will be available soon; whether our media resources can support them is another challenge to solve.
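For the temporal dimension, one way a server or UA could resolve the addressing is with a keyframe index. The Python sketch below rests on stated assumptions: the `(time, byte_offset)` index format is hypothetical, the file is assumed to be interleaved in time order, and the codec headers that a decoder also needs (e.g. at the start of an Ogg file) are ignored.

```python
from __future__ import annotations

from bisect import bisect_left, bisect_right


def byte_range(index: list[tuple[float, int]], file_size: int,
               start: float, end: float | None = None) -> tuple[int, int]:
    """Map a temporal fragment [start, end) to an inclusive byte range.

    index: sorted (time_in_seconds, byte_offset) pairs, one per keyframe.
    Assumes the file is interleaved in time order, so the data for
    [start, end) lies between the keyframe at or before `start` and the
    first keyframe at or after `end`.
    """
    times = [t for t, _ in index]
    # Decoding has to begin at the keyframe at or before `start`.
    i = max(bisect_right(times, start) - 1, 0)
    first = index[i][1]
    if end is None:
        return first, file_size - 1
    # Stop just before the first keyframe at or after `end`.
    j = bisect_left(times, end)
    last = index[j][1] - 1 if j < len(index) else file_size - 1
    return first, last


keyframes = [(0.0, 4_000), (5.0, 120_000), (10.0, 250_000), (15.0, 371_000)]
first, last = byte_range(keyframes, 480_000, start=7, end=12)
print(f"Range: bytes={first}-{last}")
```

For `t=7,12` this yields bytes 120000 to 370999, which could be requested with an HTTP Range header. The spatial dimension is not covered by this sketch.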

Finally, hyperlinking out of media resources is not generally supported at this stage. Certainly, some types of media resources – QuickTime, Flash, MPEG4, Ogg – support the definition of tracks that can contain HTML marked-up text and thus can also contain hyperlinks. But standardisation in this space has not really happened yet. It seems to be clear that hyperlinks out of media files will come from some type of textual track. But a standard format for such time-aligned text tracks doesn’t yet exist. This is a challenge to be addressed in the near future.
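Whatever format eventually wins, the mechanism itself is simple. Here is a Python sketch of a time-aligned text track whose cues carry hyperlinks; the `LinkCue` structure is hypothetical and not modelled on DFXP or any other existing format. A player would query it as playback time advances and render the returned anchors over the video.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class LinkCue:
    start: float   # seconds on the media timeline
    end: float     # exclusive
    text: str      # anchor text to overlay on the video
    href: str      # where the hyperlink points


def active_links(cues: list[LinkCue], t: float) -> list[LinkCue]:
    """Cues whose interval contains playback time t (cues may overlap)."""
    return [cue for cue in cues if cue.start <= t < cue.end]


cues = [
    LinkCue(0, 12, "About the speaker", "http://www.example.com/speaker"),
    LinkCue(10, 30, "Slides", "http://www.example.com/slides"),
    LinkCue(45, 60, "Further reading", "http://www.example.com/reading"),
]

for t in (5, 11, 40):
    print(t, [cue.href for cue in active_links(cues, t)])
```

At 11 seconds two links are active at once; at 40 seconds none are.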

Summary

The Web has always tried to deal with new extensions in the simplest possible manner, providing support for the majority of current use cases and allowing for the few extraordinary use cases to be satisfied by use of JavaScript or embedding of external, more complex objects.

With the new media elements in HTML5, this is no different. So far, the most basic need has been satisfied: that of including simple video and audio files into Web pages. However, many basic requirements are not yet satisfied: accessibility, codec choice, and device independence are just some of the core requirements that make it important to extend our view of <audio> and <video> to a broader view of a Web media resource, without changing the basic understanding of an audio and video resource.

This post has introduced the concept of a “media resource” that keeps the simplicity of a single timeline. At the same time, it has tried to classify the shortcomings of the current media elements in a way that will help us address them in a Web-conformant manner.

If we accept the need to expose the structure of a media resource, the need to separate the media resource concept from actual files, the need for an approach to track activation, and the need to deal with styling of displayed tracks, we can take the next steps and propose solutions for these.

Further, understanding the structure of a media resource allows us to start addressing the harder questions of how to associate events with a media resource, how to associate a navigable structure with a media resource, or how to turn media resources into hypermedia.

Not to nitpick, but there is no stop() method, just play() and pause().

What does it mean, concretely, to “separate the media resource concept from actual files”? Adding a level of indirection? SMIL?

Silvia,

Naive question: You wrote:

“It seems to be clear that hyperlinks out of media files will come from some type of textual track. But a standard format for such time-aligned text tracks doesn’t yet exist.”

@Philip Oops! I’ll remove “stop()” 🙂

When I talk about “separating the media resource concept from actual files”, I mean what we are already discussing with captions and the source element: the tracks of a media resource may actually be in several files. The markup inside HTML already provides an indirection, if you will: it points to the actual files that contribute to the resource.

If you really want to compare it to SMIL, then yes, we already have similarities in HTML5 to SMIL, where we reference the contributing resources. But other than that, we need to be careful about comparing to SMIL, since SMIL allows for so many complexities that go far beyond a single linear timeline and my post is trying to clarify that this is not what we want or can work with in HTML5.

@Raphael

Yes, DFXP is one such format that supports outgoing hyperlinks. Also, DFXP has been mapped into FLV and MPEG4 (and with it QuickTime and 3GPP), so it does in fact have quite some support in the binary formats, too. It is definitely one format that turns media resources into hypermedia. SRT doesn’t provide for this. I would, however, not yet go as far as calling DFXP the one format in use by everyone: neither its adoption nor its open source tool support is there yet.

Hi Silvia, thanks for this blog post.

I always find it interesting to compare terminologies. In the Media Fragment diagram, there is a type of track composed of “discrete” media, as per the SMIL definition. By contrast, some tracks are based on “continuous” Media.

http://www.w3.org/TR/SMIL3/smil-timing.html#Timing-DiscreteContinuousMedia

http://www.w3.org/TR/SMIL3/smil-extended-media-object.html#smilMediaNS-Definitions

Time-aligned text, in particular W3C-TimedText-AFXP-DFXP-TTML (or whatever it ends up being known as), is an interesting case. The simple internal timing model means that such a media track can be seen as a linear (i.e. from a defined “begin” to a known “end” without intrinsic discontinuity) time-based resource (i.e. not frame-based) that returns a visual depending on essentially two input parameters: a time offset and the coordinates+dimensions of a host rendering area. Both parameters get resolved against particular reference points, such as the wallclock for time or a video rectangle for the space domain. Generally speaking, this kind of “text media” can actually be thought of as a motion-pictures track, with similar content addressing behaviors (i.e. “give me a time offset and I will return a frame to render, in a deterministic/reproducible manner”). Contiguous “frames” of static text do not necessarily have identical durations (i.e. non-constant frame rate), so the analogy with video ends here (in SMIL, the “textstream” element is recommended instead of “text”). However, this model is also compatible with transitions (visual animation from one internal frame to the next, with no impact on the execution timeline), although DFXP only offers roll-up captioning, whereas SMIL offers much more with its library of transitions.

In my line of work (DAISY Digital Talking Books), text is primarily a static resource that is hosted within a SMIL presentation so that an audio narration can be finely synchronized with its corresponding textual source (paragraph, sentence, word, etc.). This model also works with the reverse scenario where the text is a transcript of an original audio track, so this may also be suitable for the video+captions use-case. DAISY offers an additional structure on top of the timing and synchronization layer: hierarchical navigation, slightly richer than that of an EPUB book (table of contents, page numbers, footnotes, sidebars, etc.). In this context, cross-publication navigation (i.e. outwards hyper-linking) is not a top priority; however, bookmarks and annotations are must-haves (inwards references to points or ranges within a given distribution unit).

Web-browsers offer off-the-shelf implementations of many crucial components (text rendering, navigation, etc.), so we’re keeping a close eye on the developments of HTML5 and Media Fragments. Let’s hope it doesn’t take another decade for these to be ratified by standardization bodies 🙂

Cheers, Daniel
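Daniel’s “give me a time offset and I will return a frame” model can be sketched in a few lines of Python: a stream of static text frames with unequal durations, looked up by binary search, whose output depends only on the time offset and the size of the host rendering area. The `TextFrame`/`TextStream` names, the assumption that frames never overlap, and regions expressed as fractions of the host area are illustrative choices, not how DFXP or SMIL define it.

```python
from __future__ import annotations

from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class TextFrame:
    begin: float     # seconds
    end: float       # exclusive; frames may have unequal durations
    text: str
    region: tuple[float, float, float, float]  # x, y, w, h as fractions of host


class TextStream:
    def __init__(self, frames: list[TextFrame]) -> None:
        # Assumption: frames do not overlap, so sorting by begin is enough.
        self.frames = sorted(frames, key=lambda f: f.begin)
        self._begins = [f.begin for f in self.frames]

    def render(self, t: float, host_w: int, host_h: int):
        """Deterministic: the same (t, host size) always gives the same result.

        Returns (text, pixel box) or None when t falls into a gap.
        """
        i = bisect_right(self._begins, t) - 1
        if i < 0 or t >= self.frames[i].end:
            return None
        frame = self.frames[i]
        x, y, w, h = frame.region
        box = (round(x * host_w), round(y * host_h),
               round(w * host_w), round(h * host_h))
        return frame.text, box


stream = TextStream([
    TextFrame(0.0, 2.5, "Hello", (0.1, 0.8, 0.8, 0.1)),
    TextFrame(2.5, 3.0, "there", (0.1, 0.8, 0.8, 0.1)),
    TextFrame(4.0, 7.0, "A longer caption line", (0.1, 0.05, 0.8, 0.1)),
])
print(stream.render(2.7, 640, 360))   # inside the short second frame
print(stream.render(3.5, 640, 360))   # gap between frames: nothing to show
```

The uneven frame lengths (2.5 s, 0.5 s, 3 s) are what breaks the constant-frame-rate analogy with video, while the lookup itself stays as reproducible as a video frame lookup.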

@Daniel

Thanks for your thoughts!

In the picture, I would think that everything is “continuous” media, since everything has a relationship to the main video timeline. I agree that DFXP as aligned to a video is also “continuous” media, even if it might have gaps. It belongs in the “time-aligned text” category. “Discrete” media would only be media that has no relationship to time and just exists. BTW: video and in particular audio can also have a non-constant frame rate, so it’s not that different after all.

I do believe that DAISY – just like SMIL – consists of presentations with multiple timelines, and that this is what DAISY needs. It would be interesting to see if the DAISY specification – just like the SMIL specification – could be implemented using HTML5 and JavaScript. With all the modern extensions of HTML5, it may well be possible to do so. I am curious as to what the limitations would be. Hierarchical navigation will certainly be implementable with Media Fragment URIs, HTML and JavaScript. Just look at the linking that is done in http://oggify.com/videos/7 – an experimental site of ours.

We’re not fully there with the standard and not fully there with implementations, but we are certainly getting closer and I hope it will all be resolvable within the next 6 months.